Jacking Into the Machine: Using AI Without Selling Your Soul

By Andrew Lowe, TalaTek Senior Information Security Consultant

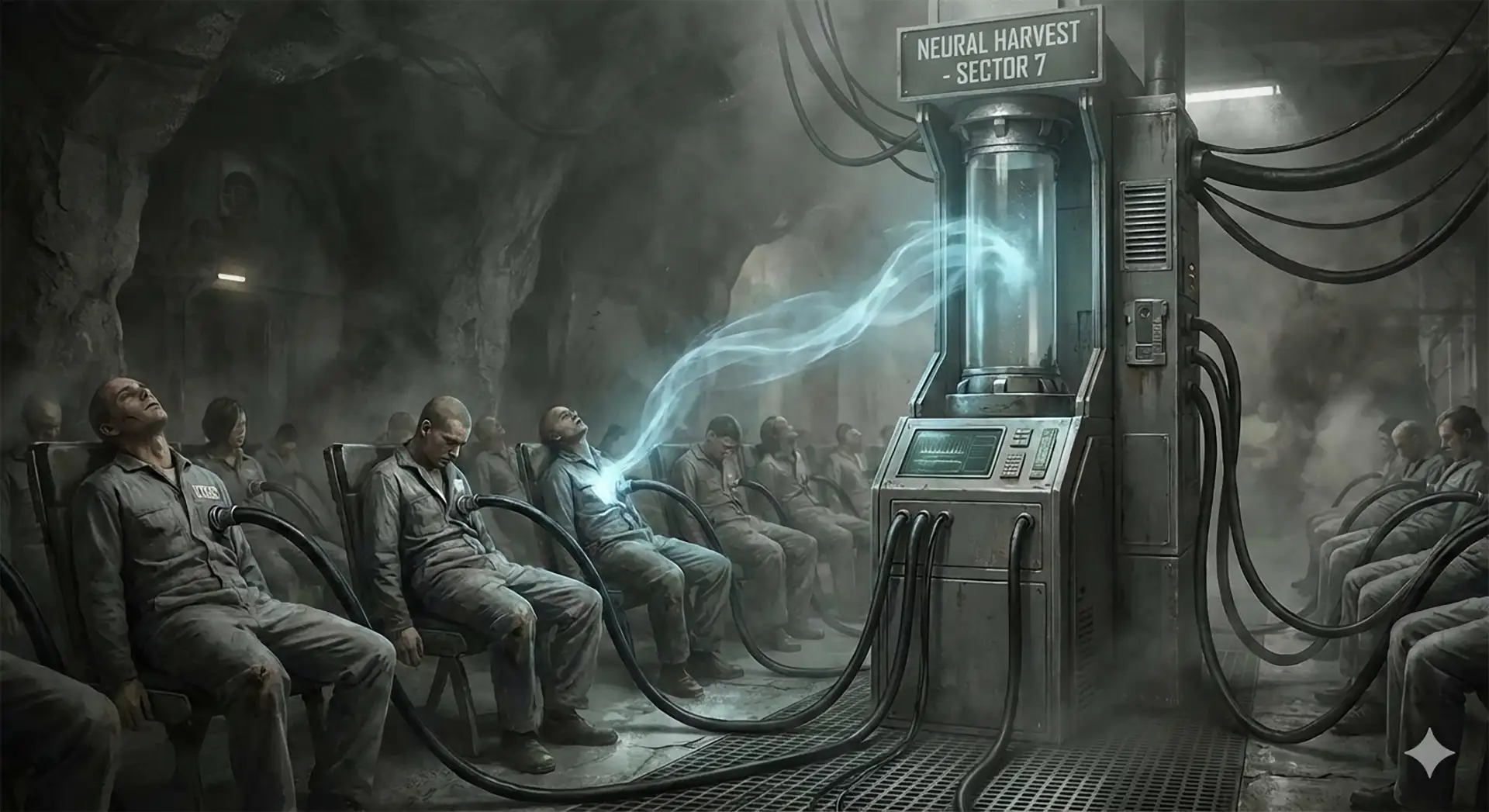

The new AI revolution offers godlike power at your fingertips. Language models write code and analyze data with inhuman speed; image models generate art on command. But these aren’t neutral tools. They’re corporate black boxes that devour your inputs and spit out digestible profits. Using AI safely means understanding that you’re interfacing with a system designed to extract value from every interaction. Jack in carefully or get flatlined fast.

Understanding the Data Harvest

When you feed prompts into ChatGPT, Claude, Midjourney, or any other cloud AI, your inputs get processed, logged, and potentially used to train future models. Companies claim anonymization, but context destroys anonymity: a conversation about your specific work project or medical condition can remain identifiable even with your name stripped.

Read the privacy policies like you’re planning a corporate data heist: you need to know the security parameters. Some services let you opt out of having your conversations used as training data. OpenAI lets you turn off model training in ChatGPT’s data controls and offers temporary chats that stay out of your history. Anthropic offers similar controls for Claude. Enable these immediately. Also check whether conversations sync across devices through cloud storage; each sync is another exposure point.

Never Transmit Sensitive Intel

Treat AI chatbots like unsecured public terminals. Never input passwords, API keys, Social Security numbers, financial credentials, or medical records. Don’t paste confidential work documents, proprietary code, or personal communications. Once transmitted, that data is compromised. You’ve lost operational control.
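You can automate part of this discipline with a tripwire that scans a prompt before it leaves your machine. Here’s a minimal Python sketch; the pattern names and regexes are illustrative assumptions with nowhere near the coverage of a dedicated secret scanner, so treat a clean result as "nothing obvious," not "safe."

```python
import re

# Illustrative patterns only (assumptions, not a complete rule set).
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\b(password|passwd|pwd)\s*[:=]\s*\S+"),
}


def find_sensitive(text: str) -> list[str]:
    """Return the name of every pattern that matches somewhere in the text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def check_prompt(text: str) -> None:
    """Raise before sending if the prompt appears to contain secrets."""
    hits = find_sensitive(text)
    if hits:
        raise ValueError(f"Refusing to send prompt; matched: {', '.join(hits)}")
```

Call `check_prompt(prompt)` right before whatever code sends the prompt, so a hit stops the transmission instead of merely logging it.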

For work scenarios requiring AI assistance, sanitize your data first. Replace real names with codenames, remove specific dates and locations, generalize identifying details. If you need document analysis, create a redacted summary that preserves utility without exposing confidential elements.
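Codename swaps are easy to script. This sketch assumes you already know which names to hide (you supply the `names` list) and only catches ISO-format dates like `2024-03-15`; locations and other identifiers need their own rules. The codename-to-name mapping never leaves your machine, so you can restore real names in the AI’s reply locally.

```python
import re

# Only ISO 8601 calendar dates are caught here; other formats need more patterns.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def pseudonymize(text: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Swap each listed name for a codename and blank out ISO dates.

    Returns the sanitized text plus a codename -> real-name mapping
    that you keep locally.
    """
    mapping: dict[str, str] = {}
    # Replace longer names first so "Ann Lee" is handled before "Ann".
    for i, name in enumerate(sorted(names, key=len, reverse=True), start=1):
        codename = f"PERSON_{i}"
        mapping[codename] = name
        text = re.sub(rf"\b{re.escape(name)}\b", codename, text)
    text = DATE_PATTERN.sub("[DATE]", text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the real names back into a response, on your own machine."""
    for codename, name in mapping.items():
        # Word boundaries keep PERSON_1 from matching inside PERSON_10;
        # the lambda inserts the name literally, with no escape processing.
        text = re.sub(rf"\b{re.escape(codename)}\b", lambda _m: name, text)
    return text
```

For example, `pseudonymize("Ann Lee met Bob on 2024-03-15.", ["Bob", "Ann Lee"])` yields `"PERSON_1 met PERSON_2 on [DATE]."` plus the mapping you’ll need to decode the answer.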

Running Local: True Air-Gapped AI

Deploy AI models locally when possible. Tools such as Ollama and LM Studio let you run capable open-weight language models on your own hardware. Inference happens on your rig, so your prompts never reach a provider’s servers. For a true air gap, download the models you need, then cut the machine’s network access. Performance won’t match the cloud behemoths, but the privacy trade-off is worth it for sensitive operations.
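Ollama serves a local HTTP API, by default on port 11434. This minimal sketch sends a prompt to its `/api/generate` endpoint using only the Python standard library, and refuses to send anything if the configured URL isn’t a loopback address: a cheap guard against a config change quietly pointing your "local" setup at a remote box. It assumes a default Ollama install; the model name in the usage line is an assumption, so use whatever model you’ve actually pulled.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Default Ollama endpoint (assumes you haven't changed the host or port).
OLLAMA_URL = "http://127.0.0.1:11434/api/generate"


def is_loopback(url: str) -> bool:
    """True only if the URL points at this machine."""
    host = urlparse(url).hostname or ""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:  # a non-IP hostname we can't vouch for
        return False


def build_request(prompt: str, model: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request, refusing any off-box host."""
    if not is_loopback(url):
        raise ValueError(f"Refusing to send prompt off-box: {url}")
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Usage, with a running Ollama server: `print(ask_local("Summarize this redacted memo: ...", model="llama3.2"))`.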

For image generation, Stable Diffusion runs locally on a reasonably capable consumer GPU, so your creative prompts never get logged by corporate services. Local models also sidestep the hosted services’ content filters, which can block legitimate creative work.

Operational Security: Minimum Information Disclosure

Only provide minimum necessary information. Need writing feedback? Share the relevant section, not your entire manuscript. Requesting code assistance? Provide the problematic function, not your whole application with business logic exposed.

If possible, create separate accounts for different operational contexts: personal queries on one account, work-related tasks on another. This limits how much any single account profile reveals about you, though a provider can still link accounts through shared email addresses, payment details, or devices.

Corporate vs. Consumer Services

Enterprise AI services often include contractual privacy protections that consumer versions lack. If your employer provides AI tool access, understand the privacy agreement. Business contracts typically prohibit using customer data for training and often include data residency guarantees.

Free consumer AI services are built to extract data, whether to improve products or to target advertising. The service you use for casual experiments shouldn’t be the same one handling anything sensitive.

Anonymous Access Protocols

Some AI services allow limited anonymous access. OpenAI’s ChatGPT can be used without registering an account, and DuckDuckGo’s AI Chat offers anonymous access to several models, including Claude. These options limit long-term profile building, though individual sessions may still be logged.

Use privacy-focused email addresses for AI account registration. Services such as SimpleLogin or addy.io (formerly AnonAddy) create forwarding addresses that mask your real email. When a service requires payment, use privacy-protecting methods such as virtual card numbers.

Regular Data Purges

Regularly purge conversation history on AI platforms. Even with training opt-out enabled, providers retain conversations for varying periods. Delete old chats containing anything you wouldn’t want exposed in a data breach.

Some services let you export your data. Download your history periodically for personal archives, then delete it from provider servers. This gives you the benefit of records without long-term corporate exposure. Keep that archive encrypted, though: it now carries the same risk on your own drive.
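Deciding what’s old enough to purge is easy to script against an export. Provider export formats vary and none is assumed here: this sketch expects a hypothetical list of records with `id` and `updated_at` fields (ISO 8601 timestamps with a UTC offset), so adapt the field names to whatever your export actually contains. It only flags conversations; the deletion itself still happens through the provider’s own interface.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def stale_ids(conversations: list[dict], max_age_days: int = 30,
              now: Optional[datetime] = None) -> list[str]:
    """Return IDs of conversations last updated more than max_age_days ago.

    Hypothetical record shape: {"id": "...", "updated_at": "2024-01-10T09:00:00+00:00"}.
    Timestamps must carry an offset so they compare cleanly with an aware "now".
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [c["id"] for c in conversations
            if datetime.fromisoformat(c["updated_at"]) < cutoff]
```

Run it on each fresh export after the archive is safely stored, then work through the returned IDs in the provider’s UI.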

Trust No One

Companies change policies. Databases get breached. Subpoenas get served. No assurance is permanent. The safest operational assumption is that your data will eventually be compromised. If you wouldn’t want something read in court or leaked to competitors, don’t input it into AI systems.

The Reality Check

AI capabilities will continue evolving, but privacy protections lag far behind. The corps building these systems profit from your data. The state wants access for surveillance. Treating these tools as powerful but inherently compromised keeps you operational as the technology advances.

You’re not refusing AI. You’re using it on your terms. You’re maintaining operational security while leveraging capabilities the previous generation could only dream of. That’s the netrunner way: exploit the system without becoming part of it.

Stay sharp, stay paranoid (only a little), stay ahead of the curve.